Programming

This website is mainly about Dance, but it's also about me, so I couldn't leave out a main area of my life — not just something which has provided me with virtually all my income, but something which I love doing whether I'm paid for it or not.

Durham

I've always been a great science fiction fan, and fascinated by computers. Maths was my best subject at school: specifically Pure Maths rather than Applied Maths which I rather looked down on. As a teenager in 1961 I watched the television serial A for Andromeda written by Fred Hoyle (a famous scientist as well as a science fiction writer) and John Elliott, a novelist and television producer. I loved it, and it cemented my interest in the amazing things computers could do. I went to Durham University in 1964 to read Mathematics: there were no Computer Science degrees in those days. One of our lecturers thought it was scandalous that people doing a General Arts degree were given some lectures on computer techniques whereas the Honours Maths students were not, and said that some of his exam questions could be answered using computer programming. So I taught myself to program in Algol, an early high-level language. The computer was an Elliott 803 which had (if I'm remembering correctly) 64K of main memory. These days people would say “Oh, you mean 64 megabytes”. No I don't! Nobody had heard of megabytes in those days.

You typed your program on a teletype keyboard which produced a paper tape (upper-case only). You checked it very carefully. Then (when you were allocated a time slot) you went into the computer room and loaded the Algol compiler from magnetic tape. You fed your program into the paper tape reader. If the compiler hit an error the reader would stop and the computer would display “Error nnn”. You made a pencil mark on the paper tape to show the position of the error and got out the Algol manual to see what this error message meant. Then you had to copy the paper tape onto a new tape, make the correction at the right place, and copy the rest of the tape. Eventually the program would make it through the compiler and would actually start to execute — further corrections would undoubtedly need to be made.

I can't remember whether it was at school or university that I was given some careers advice. What options were open for a mathematician? “Well, you could become a school-teacher”. Not a chance! “Or an actuary”. “What's that?” “It's working out when people are going to die, for insurance companies”. That didn't sound a whole lot of fun! “Or you could become a computer programmer”. So really there was no decision to be made.

We had two very good lecturers in my first year, and I came top of the entire year in the exams. Then they both left, and my enthusiasm fizzled out. While I was at school my father asked me “What use is this stuff you're learning?” and I replied “It doesn't have to have any use — you study it for its own sake”. But in my second and third years at Durham I would ask the lecturers at the end of term, “Is this any use?” “No, none at all”, they would reply cheerfully; it didn't even occur to them that this might be a criticism. I was very glad to get out into the real world after three years of that — not just to earn some money, but to do something useful! And I've never used any of the maths I studied at university.

Rolls-Royce

I tried for a job at IBM, but they turned me down. So I got a job with the Rolls-Royce Aero Engine division in Derby where they taught me PL/I and I taught myself S/360 Assembler. PL/I was a language created by IBM with the intention that it would include the best bits of Algol, COBOL and Fortran plus some other unique features, and once everybody had switched over to it IBM could stop supporting their COBOL and Fortran compilers. The word went out to the salesmen: push PL/I at all your installations. But of course that didn't work. If your company had dozens of COBOL or Fortran programmers and hundreds of live programs, you weren't going to retrain or fire all those programmers and rewrite all those programs in PL/I. I expect the programmers said “COBOL/Fortran may not be perfect, but we know it inside-out and we're so used to its shortcomings that we program round them without even noticing”. So IBM ended up with three compilers to support rather than two, and eventually they told the salesmen to stop pushing PL/I.

The System 360 computer range used punched cards, which was a step up from paper tape as you could just retype the card with the error instead of making an entire new tape. However there was a very real danger of you or somebody in the computer room dropping the deck, so the last 8 columns of an 80-column card would contain a sequence number. Again it was upper-case only.
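To show why that sequence number saved the day when a deck was dropped, here is a minimal Python sketch. It assumes each card is an 80-character string whose columns 73-80 hold a zero-padded sequence number; the PL/I card text and the numbering in steps of 10 (leaving room to slot in extra cards later) are invented for illustration, and `make_card`, `sequence_number` and `restore_deck` are hypothetical names.

```python
# Sketch: restoring a dropped deck of 80-column cards by the sequence
# number punched in columns 73-80. Card contents are invented examples.

CARD_WIDTH = 80
SEQ_START = 72  # 0-based index of column 73


def make_card(text: str, seq: int) -> str:
    """Pad the program text to 72 columns and append an 8-digit sequence number."""
    if len(text) > SEQ_START:
        raise ValueError("program text must fit in columns 1-72")
    return text.ljust(SEQ_START) + f"{seq:08d}"


def sequence_number(card: str) -> int:
    """Read the sequence number from columns 73-80."""
    return int(card[SEQ_START:CARD_WIDTH])


def restore_deck(dropped: list[str]) -> list[str]:
    """Put a shuffled deck back in order using the sequence numbers."""
    return sorted(dropped, key=sequence_number)


source = ["TEST: PROC OPTIONS(MAIN);", "  PUT LIST('HELLO');", "END TEST;"]
deck = [make_card(line, (i + 1) * 10) for i, line in enumerate(source)]
dropped = [deck[2], deck[0], deck[1]]  # the deck after hitting the floor
for card in restore_deck(dropped):
    print(card)
```

On a real installation this job was done by a mechanical card sorter rather than a program, but the principle is the same: the order lives on the cards themselves, not in the way they happen to be stacked.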

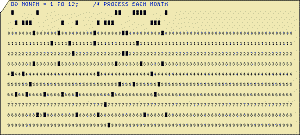

Here's an example of the humble punched card — once used in their millions, now a historical memory. Why do you think the PC display under DOS was 80 characters wide? Such was the weight of tradition that everyone knew a line of text should be 80 characters, even though no PC had ever seen a punched card! And it's still that width! Go into the command prompt and start typing — you'll find that after 80 characters (including the directory name stuff at the start) it wraps around to the next line.

After about a year my manager called me into his office — his manager was also there — told me they were giving me a rise and asked what I thought. Unfortunately I couldn't remember how much they were currently paying me, tried multiplying my weekly pay-packet by 52, or maybe it was multiplying my monthly pay-packet by 12: either way I couldn't do it in my head! Then his manager asked me what I thought of Rolls-Royce. “Do you really want to know?” I asked him. He assured me that he did. “Well, I think the place is a shambles!” I gave several examples of why I had come to this conclusion. He was quite taken aback, and eventually said rather defensively that he didn't think they were any worse than many other companies. That might well have been true, but it was the first company I'd ever worked for, and Rolls-Royce was one of the top names in aeroplane engines as well as cars — I expected something better! The company wasn't too well thought of by the locals either. They referred to it as “Royce's”, because Royce was the engineer, the technical man, whom they had respected; Rolls merely put up the money. If a manager transferred there from another company, the common expression was, “He's retired to Royce's”. I've since read that poor middle-management is the curse of British industry, and I can well believe it.

IBM

After 18 months I was fed up with Derby and Rolls-Royce, and wanted to try for IBM again, in their Development Laboratory at Hursley, near Winchester on the south coast. My father had a contact in IBM and asked why I had been turned down before. The answer came back: “He did very well in the aptitude test, but we could hardly get a word out of him in the interview”. So this time I made up my mind to be as extroverted as possible. I got the job, though I learnt a couple of years later that there were concerns that I was very quiet! They must have lost out on a lot of good people — a programmer doesn't need to be loud and extroverted!

I worked in the PL/I Language Group for a few months — it was all very abstract and theoretical, not my thing at all. So I asked to be moved to the team who were building the PL/I Optimising Compiler, and there I flourished. The compiler was written in Assembler to compile PL/I, both languages that I knew well. I was warned there wouldn't be much to be done as the compiler was due out soon. But even IBM is no good at estimating — we passed two internal deadlines and came up against an external one. Almost all the team worked overtime every Saturday and Sunday morning for a year, and I earnt enough money to buy myself an expensive electronic organ! Tony Burbridge the manager wasn't paid overtime, but he came in to make coffee for the team, which I'm sure earnt him a lot of respect.

I stayed at IBM for 5½ years. In many ways IBM was a very good employer, but lots of minor annoyances built up, usually involving petty bureaucracy. One example: for some reason there was a shortage of something and it was decreed that we would only be allowed half a cup of tea or coffee when the trolley came round — but we would still have to pay the full price. You've no idea the number of man-hours that were wasted with people complaining to each other in the corridors! No doubt the catering manager felt that he still had to meet his sales target, but that's so short-sighted. If instead he had announced “We're sorry, you can only have half a cup today, but we won't charge you for it” there would have been tremendous good-will and people would have worked that much harder. But I had discovered the “competing departments” syndrome — individual departments of a company have their own motivations which might well be detrimental to the company as a whole — this seems to apply everywhere. For instance there was a Testing department, deliberately kept separate from the Programming department so that they couldn't be influenced by the Programming managers, but of course there was plenty of communication at the bottom level. The Testing Manager sent out a memo to everyone in the Programming department saying that they were not to contact members of the Testing department because they were far too busy. The Programming Manager, who had a bit of common sense, replied with a memo saying that members of the Testing department were still welcome to contact the Programming department, even though they were also very busy.

Another reason for leaving IBM was that there didn't seem to be any single women where I was living (Chandlers Ford, basically a dormitory town for IBM), so in 1974 I moved to London to work for an agency (a “body shop” as they tended to be called disparagingly), thinking that they would send me out to lots of different companies.

The Stock Exchange

In fact they sent me to The Stock Exchange where I worked on and off until around 1992. This was mainly PL/I programming, though I also did plenty of training.

If you weren't involved in computers then, you've no idea what it was like. You would get one run of your job a day, two if you were lucky, so desk-checking was absolutely vital and you needed to be working on several different programs simultaneously.

The program specifications were written by Systems Analysts who were considered far above the lowly programmers who had to implement the specification. My grandmother knew nothing about computers, but she still told people I was a Systems Analyst because she knew that sounded better. On one of the programming courses I later ran at The Stock Exchange was a Systems Analyst who loved programming. “Well why don't you ask to be transferred back to a programming department?” I asked. He was horrified. “I couldn't do that! What would people think? What would my wife think?”

And the specs were so bad! Sometimes they read like COBOL programs. “Add this to that. If this is greater than that, go to step 7…” and so on — no explanation of what it all meant, just a list of instructions for an idiot. The first time reading a new specification through, it meant absolutely nothing to me. This used to worry me but eventually I accepted the fact, and later when I was lecturing I would reassure people by telling them this. The second time through I picked up a few things that started to make sense. Then I would write out my understanding in my own words. In fact when I'd finished the program I'd sometimes rewrite the spec, because by then I knew what it was supposed to mean. Sometimes I would question the Analyst who had written it. This was considered revolutionary — he was an Analyst and I was a mere programmer. But to me it seemed common sense. On one occasion I remember asking what I should do if a particular value didn't appear somewhere. “Oh, but that can't happen”, he said. Obvious to him maybe, but not stated anywhere.

Again I came up against the “competing departments” syndrome. The operators had an unspoken belief that they could do their job so much better if programmers didn't keep submitting jobs which would crash after a few seconds, usually after the operators had had to mount three disks — take each one out of its cabinet, put a cover on it, carry it over to the disk drive, put it into the drive and remove the cover. They didn't consider the fact that their sole purpose was to run the jobs which the programmers had set up. The programmers felt that they could do their job so much better if it weren't for the users, ignoring the fact that their sole purpose was to write programs for those users.

On one occasion my manager told me that I mustn't sort out problems for other teams, just get on with my own work. By this time I had a reputation as someone who was good at fixing bugs and reading dumps — print-outs of main storage at the moment the error occurred — and people would often come to me for help. I said “But we're all working for the Stock Exchange, and I can often solve the problem in a few minutes whereas they would struggle with it for an hour or more”. But he was concerned about his deadlines, not the big picture. I told him firmly that it really wasn't taking up much of my time and I would continue to do it.

The worst case I came up against was a group called Document Control. I was in charge of a small team writing a suite of programs, so I asked for copies of the specifications. “No, you can't have those — you're not on the circulation list.” In vain I explained that my team had the job of writing these programs. To them they were not programs to be written; they were documents to be controlled. I said that in that case I would have to photocopy somebody else's. They were horrified! “You can't do that — you wouldn't get the updates.” I pointed out that it would probably be my team giving the updates, since we were the ones working on the specifications and if there were corrections or clarifications to be made they would come from us. This meant nothing to them. I went ahead and did the photocopies.

Lecturing

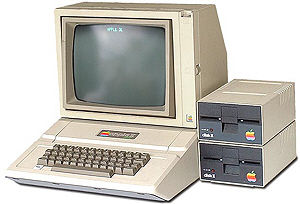

In 1978 Mike Kerford-Byrnes, the Systems Programming Manager at The Stock Exchange, asked me if I would be interested in developing and presenting a 2-week PL/I course for Infotech, a training company based in Maidenhead whom Mike also lectured for. I took up the challenge, gave the first running of the course at The Stock Exchange, and subsequently ran it many times for Infotech, as a result of which I picked up several of their other courses to run. Despite never having been on a public speaking course I seemed to be good at lecturing. I think part of this was down to me being entertaining — something I learnt from Peter Jenkins as mentioned in my Caller's notes — and partly because I asked the students lots of questions rather than spoon-feeding them the answers, and gave them lots of exercises, sometimes with them working individually and me going round seeing what they were doing and questioning them, sometimes as a group with me at a whiteboard (or more likely a blackboard) and individuals adding a line of code at a time or disagreeing with the previous person's line. It's so easy for students (and the lecturer) to think they've understood something, and then putting it into practice reveals that they really haven't. One of the team leaders at The Stock Exchange observed that my courses covered much the same material as other lecturers', but people seemed to remember more from mine.

In July 1979 I became a full-time freelance lecturer on programming and other computer-related subjects. I bought my first computer, mainly to hold my course notes — an Apple II (long before the days of the Apple Mac). Read about it here. The motherboard had 48K of RAM and came with the BASIC language which I knew I wasn't going to like, so I also bought the Pascal Language System with a card which increased the memory to 64K — the maximum possible since the CPU held addresses as 2-byte values, though a trick called bank-switching enabled you to get a little more. So it had the same amount of memory as the Elliott 803 on which I had started programming, but instead of needing a large room with air conditioning it sat on my kitchen table. Actually it did tend to overheat, so I built a raised wooden platform with breathing space around it which fitted on top of the case and held the monitor. The system was completed by two 5¼" disk drives. Pascal is based on Algol, so I had no problems with the language. I wrote a word processor to print out my course notes (whereas Infotech were still using typewriters for their hand-outs). I also taught myself 6502 Assembler and started writing a music editor and player which took up an enormous amount of time and was never finished. Looking back on it, I very much wish I'd spent my time writing musicals instead! (Read more about my Writing.)
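The arithmetic behind that 64K ceiling is simple: a 2-byte address is 16 bits, so the CPU can name 2^16 = 65,536 different bytes, which is 64K. Bank-switching gets round this by letting the same range of addresses refer to different physical memory at different times. The Python sketch below is a deliberately simplified illustration, not the Apple II's real memory map or switching mechanism: the 4K window at `0xD000`, the two banks, and the names `BankedMemory`, `WINDOW_START` and `WINDOW_SIZE` are all hypothetical.

```python
# Simplified illustration only: NOT the Apple II's real memory map or
# soft-switch addresses. WINDOW_START, WINDOW_SIZE and BankedMemory are
# hypothetical names chosen for this sketch.

ADDRESS_BITS = 16                   # a 2-byte address
ADDRESS_SPACE = 2 ** ADDRESS_BITS   # 65,536 distinct addresses = 64K

WINDOW_START = 0xD000               # hypothetical start of the switchable window
WINDOW_SIZE = 0x1000                # 4K window


class BankedMemory:
    """64K of addressable memory, with one 4K window backed by two banks."""

    def __init__(self) -> None:
        self.main = bytearray(ADDRESS_SPACE)  # the window's slice of this is never used
        self.banks = [bytearray(WINDOW_SIZE), bytearray(WINDOW_SIZE)]
        self.current_bank = 0

    def select_bank(self, bank: int) -> None:
        """Switch which physical bank appears in the window."""
        if bank not in (0, 1):
            raise ValueError("only banks 0 and 1 exist")
        self.current_bank = bank

    def _locate(self, address: int) -> tuple[bytearray, int]:
        """Map a CPU address to (physical memory, offset)."""
        if not 0 <= address < ADDRESS_SPACE:
            raise ValueError("address does not fit in 2 bytes")
        if WINDOW_START <= address < WINDOW_START + WINDOW_SIZE:
            return self.banks[self.current_bank], address - WINDOW_START
        return self.main, address

    def read(self, address: int) -> int:
        memory, offset = self._locate(address)
        return memory[offset]

    def write(self, address: int, value: int) -> None:
        memory, offset = self._locate(address)
        memory[offset] = value  # bytearray rejects values outside 0-255


# Physical bytes reachable: everything outside the window, plus both banks.
reachable = (ADDRESS_SPACE - WINDOW_SIZE) + 2 * WINDOW_SIZE
print(ADDRESS_SPACE // 1024, reachable // 1024)  # 64 68
```

The CPU still only ever sees 64K at once; the "little more" comes from the program switching banks and knowing which one is currently visible.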

I also discovered that technical manuals could be fun! The Apple manuals were so different from the IBM manuals, and that dramatically affected my own writing style — for the better, I strongly believe.

I've always felt that programming isn't just about working with machines, it's also about working with other people — managers, clients, other programmers. I realise that being a good programmer requires a certain kind of personality. I don't know whether I'm very pedantic because I'm a good programmer, or a good programmer because I'm very pedantic, but you certainly need the ability to think as a machine thinks rather than as a human being thinks. However there's more to it than that. In the old days programmers could get away with anything because it was such an arcane art — they could wear scruffy jeans and T-shirts while the rest of the company was in suits. Much of the mystique has now gone — the manager is likely to say “My 10-year-old son can do programming — what's so special about you?” But programmers can still have the attitude that their computing skills are everything, and that's just not true. Let me give you some examples.

While I was at IBM they regularly invited a few students to work in a team for a period, to give them a taste of what real-world programming was about. My team was allocated a young man called Jonathan. He was a real whizz-kid, and everybody hated him! I was put in charge of him, possibly because I was perceived as a bit of a whizz-kid — though I was nowhere near as bad as Jonathan. He was extremely bright. Usually when I came up against someone who might have intimidated me, I would think “Bet he can't play the guitar though”. Jonathan could! He was due to go to university and he hadn't decided whether to read Music or Mathematics (he'd been offered places in both) — that's how bright he was. But he had no concept of (or interest in) how he came across to other people. If he found an unoccupied terminal in the terminal room he would log the previous user off and log himself on — they'd probably just gone to the toilet or the coffee machine. He wouldn't do the work he'd been assigned to do — that was too simple or too boring. I don't think I made any impression on his attitudes, but at least I was a buffer between him and some of the other team members who would cheerfully have strangled him.

I ran a programming course for Texas Instruments in Bedford. One of the students had the catch-phrase, “Colin, I'm confused”. Technically she was competent rather than brilliant, but she got on well with people, she was fun, and everybody loved her. All the departments were saying “When she's finished her training, we want her”. And there was a much brighter man, but he came across as arrogant, not willing to listen, too full of himself. I had a go at him a couple of times, contrasting him with this woman, trying to make him understand that technical ability wasn't enough and she was going to do far better than him in the long run. I don't know whether I got through to him, but I believe it was part of my job to teach the students that being a programmer in a company means being part of a team, and that requires quite different skills.

The Stock Exchange took on a group of graduates each year, and I did their training. I remember trying to explain something in different ways because they weren't getting what I was trying to say, and one woman suddenly said, “Colin, you really care whether we understand things”. I was taken aback by this, and said, “Well of course I do — it's my job to explain things so that you understand them”. She said that for three years at university she'd been “taught” by lecturers who had no interest in students and didn't care whether they understood or not — I imagine they were there because they wanted to do research, and I'd suffered from one of those at Durham.

The graduates were actually taken on before their results had come out. One year I was taken aside by the leader of the Training Department who said of one of the trainees, “We've just heard from her university — and she's failed her degree course. She hasn't told us”. I immediately said, “It doesn't matter. She's doing really well in the training, and she gets on well with people and will be good at working in a team. Forget about it”. I'm glad to say they took my advice.

I also did some lecturing abroad, which was very good for me — it's so easy to assume that the way things are done in your country is the only possible way. I had some particularly interesting experiences in Stavanger in Norway, and they're still fresh in my memory.

I can't recommend Stavanger in December — the British equivalent is perhaps Aberdeen though I've never been there so I might be doing Aberdeen an injustice! On one occasion I didn't think I would even get through customs at the airport. I was taken to an interview room by a belligerent customs officer. “You have no papers to say that you can work here”. I explained that I was employed by a training organisation in England — I wasn't directly working for a Norwegian company. “And what are these?” he asked, unpacking my box of materials suspiciously. “They're manuals to go with the course I'm teaching”. “So you're selling them”. “No, I'm not selling them — they're just part of the course material”. “Who is your contact in Norway? We need to speak to him.” I had a name and an office phone number — but this was a Sunday evening and the office would be closed. He deliberated over this; I can't remember whether he went off to speak to somebody else, but it all took a long time. Eventually he just said, “You may go”. No explanation or apology — but I wasn't sticking around to wait for one! I returned to Norway the next week armed with a letter from the training organisation in England explaining the set-up — and nobody questioned me.

The hotel was very expensive but I didn't like it at all. It was assumed that if you were there for an evening meal you would be there for the whole evening. Someone would show you to a table — and leave you. A long time later someone would come and give you a menu — and leave you. Then someone would take your order. After you'd finished the main course they would clear things away — and leave you. Eventually they'd come back and ask whether you wanted a dessert — bring the dessert menu — and leave you. I got wise to this rapidly! When I went in I would pick up a menu from the pile, so that by the time I'd been taken to a table I was ready to order. And when they cleared away the main course I would order my dessert. It still took a long time though, and I'm glad I wasn't paying for the food myself.

The restaurant was very dimly lit, which they probably thought was sophisticated, but at breakfast time it was simply dingy, with half-asleep customers stumbling around trying to find what they wanted. And it was self-service: I know that's the custom now in many hotels in England, but it wasn't then, and when I'm half asleep in a foreign country I would really prefer someone to take my order and then present it to me. I didn't want all the cold meats and cheeses — I wanted toast and jam and a decent cup of tea! I found some fruit compôte which I pretended was jam. The first tea-bag I chose was something herbal, but I could live with it. The second was disgusting — I dumped that one and finally found some tea that I could enjoy.

At the office building I was told by an Englishman on the course who had lived there for some years, “Norway is a much more foreign country than France or Germany”, and he was right. He said that all the social life centred around their homes, and since I didn't have a home there I wouldn't see any social life. And he warned me, “You won't get anyone to do anything after 4 o'clock — they just won't concentrate on anything you say”. It was true: everything closed at 4 o'clock. On one occasion I took a little while tidying my things up and found it difficult to get out of the building — the receptionists had all left and there was an air-lock style double door with no-one at the desk to press the two buttons. Having got back to the hotel I ended up wandering round the streets — everything was closed except a kiosk selling sweets and so on. And at the end of the course I said “I'm going to stick around for as long as you need me, so you can do several runs of your program and I'll help you debug them”. No-one was interested. Now if I'd been on a course run by a foreign lecturer and he'd made an offer like that I would most certainly have taken him up on it — but maybe I'm unusual.

On one occasion I decided to stay in Stavanger over the week-end in the middle of the two-week course. “Hire a car and see the area”, said my employer in England. In a foreign country, with a strange car, on the wrong side of the road, in snow and ice? I don't think so. I went on a cruise round the fjords — and I was so bored I couldn't wait for it to finish. I'm not a good tourist, particularly on my own.

Another interesting experience was lecturing for NatWest Bank in Central London. I don't know what they're like now, but they were a very old-fashioned organisation then. Another lecturer who had done several courses there told me about the job. At the end, very embarrassed, he said, “Oh, and we'd like you to wear a suit”. “I don't have a suit”, I replied. (I usually lectured in a sports jacket, but that obviously wouldn't be good enough for NWB.) “No, I thought you might not”, he said nervously. “If we pay for a suit, would you be willing to go out and buy one?” So I went to Oxford Street with my girl-friend and we chose a suit. The silly thing was, as soon as people got to the bank they took their jackets off — I was the only person actually wearing a suit.

The lecture room was laid out as a school classroom with several rows of desks. I'm not a school-teacher, and I like interaction between the students, so I told them to arrange the desks around three sides of the room leaving a central area. They were very dubious about the idea — “We're not supposed to do that”. I told them I was running the course my way, and they did it. When I came back for the second week I found that the desks had been put back in straight rows — so we moved them again. I had a meeting with the manager who said that he wanted me to set an exam for the students — and he didn't expect them all to pass it. In fact I made it much too hard — I had no experience of such things — but I was horrified by the whole management attitude. I was told by the lecturer who had asked me to buy a suit that the first time he'd lectured there the management had walked into the room while he was teaching and started telling the students things without any sort of apology. Good thing they didn't try that on me — I'd have told them to get out!

One of my biggest challenges was running a course for the American Army in Heidelberg in Germany, when they had been up all night on manoeuvres. I opened all the windows, moved about the room much more than usual (and I was suffering from a really bad back, so that was a challenge in itself), and was extremely animated. No-one fell asleep.

I always start a course by asking people their names, their background, and what they expect from the course. On several occasions, people have said, “Well, nothing really, we covered all this on our Computer Science course at university”. And at the end of the course they've said, “I was wrong: I learnt a lot”. They knew plenty of obscure things that I didn't, like compiler hashing algorithms — but ask them to write a simple commercial program involving reading a file of records, doing a simple calculation and printing out a report, and they didn't have a clue. I decided Computer Science degrees were no more use than my Maths degree!

Thorn EMI

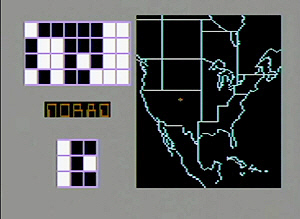

In 1983 I decided I needed a break from lecturing, and that I would like to work on micros instead. (At that time computers consisted of mainframes, such as the IBM 360 and 370 range, midi systems such as the PDP 11, and micros such as the Apple II, Commodore Pet, BBC micro and Sinclair Spectrum.) I took a job with Thorn EMI in their Computer Games department in Soho, on the Atari computer which used the same 6502 chip as the Apple II, and I knew 6502 assembler language. That was a very different experience. They were all half my age. There were no specifications, no documentation, no looking at anybody's code to see how it might be improved, no maintenance… People would play the games and perhaps make suggestions. They were all very keen game players — a new game would appear and they'd spend ages playing it, whereas I just wanted to get on with my programming. The Atari could only display a small number of colours, but it had a clever feature called “Display list interrupts”. While the dot had reached the end of a line on the monitor and was jumping back to start the next line, you had a short interval in which you could change these colours, so you could use a different set of colours in different horizontal areas of the screen. That's how I got the red “NORAD” logo (though it doesn't look very red in this picture). When the dot travelled up from the end of the bottom line to the start of the top line you had a much longer interval, and that was when you did all the calculations and updated the RAM representing the screen so that the next picture would be displayed. My version of “Computer War” (based on the film “WarGames”) sold well and was described in one review as “Thorn EMI's best ever”. 
But the company had no idea how to market video games — we were put into the section that made videos, but that was a totally wrong choice — and because some top manager somewhere was ill they never signed the agreement that we could call the game WarGames, so it had to be called Computer War and the word “WarGames” had to be in a substantially smaller font, which I'm sure reduced sales.
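
As a rough illustration of the display list interrupt idea described above, here is a toy Python model. It is not Atari code and uses no real Atari register names: the screen is just a list of rows, each pixel holds the number of a colour register, and the hypothetical `dli_changes` table says which registers to rewrite in the short gap before a given line is drawn. Resetting the palette at the start of each frame stands in for the longer gap while the dot travels back to the top.

```python
def render_frame(screen_ram, base_palette, dli_changes):
    """Turn colour-register numbers into actual colours, one scan line at a time.

    screen_ram:   list of rows; each pixel is a colour-register number
    base_palette: register number -> colour at the top of the frame
    dli_changes:  line number -> {register: new colour}, applied in the short
                  gap before that line is drawn (a toy stand-in for a DLI)
    """
    # Start of frame: the long vertical gap. The palette is reset here; a real
    # game would also do its calculations and update screen RAM at this point.
    palette = dict(base_palette)
    frame = []
    for line, row in enumerate(screen_ram):
        # Horizontal gap: the dot is returning to the left edge, so a register
        # changed now affects this line and every line below it.
        palette.update(dli_changes.get(line, {}))
        frame.append([palette[register] for register in row])
    return frame


# Four lines, two registers: register 0 turns red from line 2 downwards,
# so one register shows two different colours on the same screen.
screen = [[0, 1], [0, 1], [0, 1], [0, 1]]
for row in render_frame(screen, {0: "white", 1: "blue"}, {2: {0: "red"}}):
    print(row)
```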

You can watch a YouTube video of someone playing the game. He doesn't know what he's doing — he doesn't slow down when approaching the missile so he always overshoots, and he doesn't know that when he destroys a missile he's supposed to solve a puzzle with the flashing lights to reduce the DEFCON — and I don't know why the second half of the video contains the word “Rating”, which is certainly not part of the game, rather than my nice ending sequence. I've now found a better example on YouTube.

In 2019 I was contacted by the Atari User Group and asked to talk about my experiences writing Computer War. You can hear that at ataripodcast.

In 1985 I returned to The Stock Exchange as a Senior Programmer, and at the end of that year I returned to freelance lecturing full-time.

PC programming

When IBM entered the home computer market in 1981 I thought they'd left it too late — there were so many established competitors. But IBM standardised the industry, and also (unlike Apple) allowed other manufacturers to build and sell IBM-compatible PCs (now simply known as PCs). I bought one in December 1988 and a company called Borland were selling Turbo Pascal, so naturally I started using this. By sheer chance I came across someone who had a disk which could be accessed by both an Apple II and a PC, so I transferred a number of programs and other files. Borland also sold something called the Turbo Editor Toolbox which was the Pascal source code for a text editor, so I seized upon this and expanded it considerably to produce my own word processor, running on DOS 3.3. I know most people think it's weird to write your own word processor, but it taught me a lot and meant that it would do exactly what I wanted. Because I was in the Borland Developer Group I was then invited to a demonstration of their Paradox database. I had no desire to get involved in database programming, but I wanted to go along to see what it was all about. The presentation was a failure — they couldn't get things to work. Rikke Helms, the Managing Director of Borland UK was hugely embarrassed, and said “Don't worry — we'll send you all a free copy of the database”. I didn't believe her for a moment — it was selling at £600 — but a couple of weeks later a large box arrived in the post containing the software and about 8 manuals covering different aspects of it. So I became an expert on the Paradox database. I wrote a Membership System for EFDSS to replace the unbelievably slow one they had had till then. I was told that they would enter the details for two or three members and then leave it to chug along for twenty minutes while they went away and did something else. I assured them that this would not be necessary.
I also got plenty of work with Clarkson Research Studies, a company in the City of London who had a number of important systems running under Paradox and only one person who had written them and knew how to use them. I don't know what happened, but they fired him, and were then desperate for me to sign a contract that I would work for them two days a week. I gradually tidied up the scripts, and I had written some code in my word processor which reformatted them to a readable state — that's where writing your own editor pays off! There was one script which couldn't possibly have worked — it was referencing a file that didn't exist. I told my manager this. He suggested that maybe the writer would correct the file name, run the script and then put the wrong file name back in again, to give himself job security. I'm happy to say that this would never have occurred to me. I also worked one day a week for the International Planned Parenthood Federation in Regent's Park (the only job I've had which I could actually walk to), writing a system to be used by birth control clinics all over the world.

I was an early adopter of email — CompuServe was the only ISP in the country at that time. It had a number of message forums, and I could ask questions and find information on its Computing forum. The manual extolled all the system's virtues, and right at the end said, “You can also access the World-wide Web”. That's how unimportant they thought the web was. Eventually they realised their mistake — by this time the Borland support group had moved off CompuServe to the Web — and converted their forums to web pages, but they were too late. I'd had enough of CompuServe and there were many other ISPs around by then, so I switched. I don't remember when I set up my website, but it was certainly in the days of DOS, which had a 3-character limit on file extensions — that's why the pages are .HTM rather than .HTML.

At some point I made the jump from DOS to Windows 3.1. I'd tried to steer clear of Windows, which was much slower than DOS, but I could see that was the way the world was moving. And Borland had come up with a system called Delphi which used Pascal as its language, so again it was the obvious choice for me. I started with Version 2 in 1996 and have been using it ever since. I love Delphi which is far superior to Visual BASIC, though such is the dominance of Microsoft that Borland couldn't compete and eventually sold it off. I'm still (in 2020) using Delphi Version 6 which came out in 2001. Younger programmers will be horrified — in computer terms that's prehistoric — but I don't care! It does everything I want, and does it very well. I did buy a later version, but having tried it I decided to stick with what I had. As with Turbo Pascal it came with six or seven manuals explaining various areas of the system. And naturally I decided to write my own editor! I pulled in some of the code from my DOS Pascal word processor and have been adding to it over the years. I wouldn't call this one a word processor because it doesn't deal with page breaks, page headers and page footers, but it's something I use all the time — I'm using it now to write this web page — and I'm constantly improving it. Sometimes the improvements are so good that it stops working: I keep lots of back-ups and may be forced to look back and find out whatever change it was that I really shouldn't have made!

It allows me to edit the source of my website as a number of RTF (Rich Text Format) files and generate the web pages from them. Most people would think this a weird way to do things, but it works for me. The program is called WebEdit, but it does a lot more than edit and reformat web pages. It reformats Pascal source — I copied that from my DOS word processor. It reformats and validates HTML, CSS, Visual BASIC, JavaScript, PHP and some other file types. It edits, prints and plays ABC music files. It's an FTP client, so I can upload my web pages to the server and see at a glance which files on my hard disk don't match those on the server. And there are lots of other odd features, some of which I use only occasionally.
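
WebEdit's own comparison isn't described here, so the following is only a sketch of one way to spot files that need uploading. It assumes each side has already been listed as a dictionary mapping file name to (size in bytes, modification time), which is roughly what an FTP directory listing provides; `compare_listings` and the sample listings are hypothetical. Because uploading a file normally gives the server copy a fresh timestamp, a file is treated as out of date only if the sizes differ or the local copy is newer.

```python
def compare_listings(local, remote):
    """Compare two listings of the form {filename: (size_bytes, modified_time)}.

    Returns three sorted lists: files only on the local disk, files only on the
    server, and files on both whose local copy differs in size or is newer.
    """
    only_local = sorted(local.keys() - remote.keys())
    only_remote = sorted(remote.keys() - local.keys())
    changed = []
    for name in sorted(local.keys() & remote.keys()):
        local_size, local_time = local[name]
        remote_size, remote_time = remote[name]
        if local_size != remote_size or local_time > remote_time:
            changed.append(name)
    return only_local, only_remote, changed


# Hypothetical listings; the times are just increasing numbers.
local = {"index.htm": (1200, 100), "dance.htm": (800, 300), "new.htm": (50, 400)}
remote = {"index.htm": (1200, 150), "dance.htm": (800, 200), "old.htm": (60, 100)}
print(compare_listings(local, remote))
```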

Clarkson Research wanted a graphical front end to their shipping database and I put in a bid. I heard nothing from them, so eventually I contacted their Managing Director, Martin Stopford. He said they didn't believe I could do the job for what I'd quoted — a larger company had quoted twice as much. I assured him that I could, so he went back to the other company, sent them my specification and said, “We've been quoted this much — what is there in your quote to make it worth twice the price?” They came up with a few things that I wouldn't have done, but Martin was looking for a simple system rather than one with all the bells and whistles, so he accepted my quote and was very pleased with the result — he's still telling people how good it was, and that it came in on time and on budget.

Dance Organiser

There hadn't been any real overlap between my computer side and my dance side at this point, except for the time when I'd just finished 6 weeks lecturing for a company somewhere south of London and told a room of surprised dancers, “There's a 4-byte introduction”, but that was about to change. At Sidmouth one year I lost all my dance cards. I'd been calling a children's dance in the morning (great fun but exhausting), gone to a couple of shops, got back to where I was staying and suddenly realised that I didn't have my case. I hurried back to the shops, but no-one had handed it in. That evening I called an American dance with Blue Mountain Band without any instructions. Their leader Rick Smith had a reputation for giving callers a hard time, but he was wonderfully sympathetic to me on that occasion. The next morning I was contacted by the festival team and told that my case had been handed in, so I rushed over to collect it, but by then I had realised that I needed some kind of backup. Photocopies really weren't good enough; I'd have to cut them up and stick them onto cards (or write out the cards again) and I would have to remember to copy the card every time I wrote a new one or amended an old one. That was when I made the decision that no matter how much work it would take (and believe me, it was an awful lot of work) I was going to type out all my dance cards and hold them on a database, so that if necessary I could print out the whole lot again. I started writing a program to let me enter the cards in a consistent format and print them out on 3" x 5" cards the way I wanted them. This was a DOS Pascal program using the Paradox database. It took a few years to do all the data input, but in the process I often modified the wording based on my experiences of calling the dance and discovering what people had difficulty with — sometimes the order of the words can make a tremendous difference.
And once that was all done I had the wild idea of writing a computer program which would plan my dance programs for me! I knew that this was a major job, and I might never be able to specify what was required, but I started writing some code. I knew how I planned an evening, so why shouldn't I program the computer to do the same job and then I could just press a button and it would produce a program for an event, taking into account what sort of event it was and what I had called before for this group? I didn't get very far with this, but I realised that I had an excellent way of holding the programmes for future reference, and also automating the recording of how many times I'd called each dance, which was done by ticks on pieces of paper at that time, and instantly telling me which of the dances I had called before for this group. Later I converted this to a Delphi program running on Windows. I changed the name from “Dance Generator” to “Dance Organiser” and it has been my constant companion ever since. As with WebEdit, I'm always updating it; it now copes with recorded music (though I hardly ever use that) and I have a copy on my lap-top which I call from (though I always have the case of cards as back-up unless I'm calling abroad).

Program design

If you're not a programmer, don't switch off. I can explain this so that you understand it — I'm good at that!

When I started programming there were no design methods — you just threw the code together as it occurred to you. Some people still program like that, and with modern technology you get immediate gratification. You can write a few lines of code, run them, and produce a nice heading box in a snazzy font in six different colours, and you think, “Wow, I'm a programmer”!

Structured programming was a marked improvement on this. It's sometimes described as “Programming without GOTOs” but that's a negative way of looking at things, like saying a Christian is someone who doesn't do this and doesn't do that. Structured programming says that a program should be constructed from combinations of three basic elements:

- Sequence: Do one thing after another. Open the file. Read the first record. Print a heading line.

- Selection: Do one or more things depending on some condition: Add Total to Sales or Add Total to Refunds or print out an error message.

- Iteration: Go round a piece of code repeatedly until some condition is met. Scan this string of characters until you find a numeric character or hit the end.
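
Here is a minimal Python sketch of the three elements, built from the examples in the list. The record layout (a transaction type of "S" for a sale or "R" for a refund, plus an amount) and all the function names are assumptions made for illustration.

```python
def first_digit_position(text):
    """Iteration: scan until a numeric character is found or the end is hit.

    Returns the index of the first digit, or -1 if there isn't one.
    """
    position = 0
    while position < len(text) and not text[position].isdigit():
        position += 1
    return position if position < len(text) else -1


def post(kind, total, ledger):
    """Selection: add Total to Sales, or to Refunds, or record an error."""
    if kind == "S":
        ledger["sales"] += total
    elif kind == "R":
        ledger["refunds"] += total
    else:
        ledger["errors"].append(f"Unknown transaction type {kind!r}")


def run(records):
    """Sequence: one step after another, with an iteration in the middle."""
    ledger = {"sales": 0, "refunds": 0, "errors": []}
    print("SALES AND REFUNDS")       # print a heading line
    for kind, total in records:      # iteration over the records
        post(kind, total, ledger)    # a selection inside the loop
    return ledger


print(first_digit_position("ABC123"))
print(run([("S", 10), ("R", 3), ("X", 1), ("S", 5)]))
```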

Then in 1975, Michael Jackson (no, not that Michael Jackson) published a book called “Principles of Program Design” which actually showed you how to design programs (though the book is rather hard going). I went on a Jackson Structured Programming course at The Stock Exchange with the attitude of “I'm an experienced programmer — he's not going to teach me anything” and I rapidly changed my mind! The lecturer showed us a flowchart of a program which I had written many times. Suppose you have a company with a number of departments, and the finance people produce a file — on punched cards, magnetic tape or whatever — giving the expenditure of each department on its various projects. The program reads this as an input file and prints out a Total line for each department. I've put a rough flowchart here, and I don't believe you need to be a programmer to understand it. The diamond-shaped box represents a decision — if the record you've just read is for a different department from the previous one, you need to print out the total for the old department and then print out the heading for the new department. But there are problems. If you're not careful, the first thing output is a zero total for a department with no heading. More importantly, if you just stop the program when the input ends (which I haven't shown — I said it was rough) you forget to put out the total for the last department, and I'm sure there are many programs with this bug still running today — a program to control spending never reveals that the final department is always over budget, and they won't be complaining! And then he told us why it was wrong, and gave the first rule of Jackson's approach: The structure of the program must match the structure of the data. This was a revelation to me. I'd never realised that data had a structure — it was just… data! But of course he was right. The input file consists of a number of departments, each having a number of transactions.
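
To make those two problems concrete, here is a hypothetical Python transcription of that rough flowchart: one big loop over (department, amount) records, sorted by department, with a "change of department" test inside it. It is deliberately buggy, and the output format is an assumption.

```python
def report_flowchart_style(records):
    """One big loop with a 'change of department' test (deliberately buggy)."""
    lines = []
    previous_dept = None
    total = 0
    for dept, amount in records:
        if dept != previous_dept:
            # Change of department: close the old one, start the new one.
            lines.append(f"  Total {total}")   # BUG 1: also fires before the first department
            lines.append(f"Department {dept}")
            total = 0
            previous_dept = dept
        total += amount
    # BUG 2: the input has ended, but the last department's total is never output.
    return lines


print(report_flowchart_style([("A", 10), ("A", 5), ("B", 7)]))
```

Running it on two departments produces a stray zero total at the top and no total at all for department B.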

Instead of flowcharts, the Jackson method uses boxes to represent Sequence, Selection and Iteration.

On the left is the data structure of the input file. The asterisk represents an iteration, and you use a little circle to represent a selection. File is an iteration of Department, and each Department is an iteration of Transaction. That's it! In a more complicated program you would combine two or more input and output data structures to produce a program structure. In this simple case it's just done by putting a verb before most of the names — you can often be more specific, but “Process” is a safe one. And because a box cannot be both a sequence and an iteration we need to add a few more boxes — these would probably have come from the data structure of the printed report actually. If you're looking for a name for such a box, adding “Body” is a standard option. That gives us what you see on the right. You then have to add the actual operations such as “Read a record” and “Add amount to total”, but that's really minor compared with getting the structure right. Yes, it's a loop within a loop, not just one big loop. Some people seem to think that's inefficient; of course it isn't. You're still reading the same number of records, but instead of the somewhat nebulous “Change Department” code we now have one obvious place to put out the department heading and one obvious place to put out the department total. And provided we put the right conditions on each iteration, it won't have the problem of not outputting the final department; it will even cope with there being no data in the file because it ran on Christmas Day, which I doubt that the previous version would have done.
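
A Python sketch of the program structure on the right might look like this, assuming the same sorted (department, amount) records. The shape follows the boxes: one loop for File (an iteration of Department) and, nested inside it, one loop for Department (an iteration of Transaction). It uses a read-ahead, so each loop condition can look at the next record; `next(records, None)` plays the part of "Read a record" and returns `None` at end of file.

```python
def report_jsp_style(records):
    """Program structure mirrors the data: File -> Department* -> Transaction*."""
    records = iter(records)
    lines = []
    record = next(records, None)            # Process File: read the first record
    while record is not None:               # File is an iteration of Department
        dept = record[0]
        lines.append(f"Department {dept}")  # start of Department: the heading
        total = 0
        # Department is an iteration of Transaction (same department, not end of file).
        while record is not None and record[0] == dept:
            total += record[1]              # Process Transaction: add amount to total
            record = next(records, None)    # read the next record
        lines.append(f"  Total {total}")    # end of Department: the total
    return lines


print(report_jsp_style([("A", 10), ("A", 5), ("B", 7)]))
print(report_jsp_style([]))   # an empty file (Christmas Day) gives an empty report
```

There is exactly one place for the heading and one for the total, the last department is closed off by the inner loop ending, and an empty file simply never enters the outer loop.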

We did a proper trial at The Stock Exchange: one team wrote a system using conventional methods and another team wrote a similar-sized system using JSP. At first the conventional team seemed way ahead — they were writing actual PL/I while the other team were still drawing those silly boxes and having endless discussions about them. But gradually they drew level, and when it came to actually testing the systems there was no comparison. The conventional team were constantly having to change their “design” to cope with things they hadn't considered in their rush to start coding — remember, many managers judged programmers on the number of lines of code written per day. The JSP team found plenty of bugs, but they were to do with missing out instructions such as clearing the totals, or perhaps putting an instruction in the wrong place; the structure of the program almost never had to be modified. And when it came to maintenance the distinction was even more clear-cut. The data structures and program structures formed part of the system documentation, and could be referred to by someone maintaining the program who hadn't been involved in the implementation.

As you may have gathered, I can be quite fanatical about the Jackson Method. When I was training each new batch of graduates, the first week was the Jackson Method of Program Design. Then I taught them PL/I, but I was constantly referring back to the design method. Later I ran this course many times for Infotech. And I still use the method today. I don't design entire programs this way (though a lot of it has seeped into my unconscious so I'm probably using it without being aware of it), but on many occasions when I've been stuck on a piece of code in WebEdit or the Dance Organiser I've thought, “What are you playing at? Draw a data structure!” Usually two or three minutes spent scribbling a few boxes on a piece of paper are enough to show me exactly how I should be coding it.

Of course there's a lot more to the Jackson Method than this, including backtracking and inversion, but I hope this has given you enough idea of what it's about to make you think differently about any programs you have already written or may write in the future.

Bugs

I once read a stupid article asking why programmers put bugs into their programs, and pleading with them not to do it. All programs have bugs, including those from IBM and Microsoft. Each main release of the PL/I Compiler had to go through a lot of testing, whereas minor releases could get by with less. By the time the testing of the main release was complete we would have found and fixed any number of bugs, so we sent out the official release (as we were required to do) together with a minor release, and told the customers, “Don't install the official release — the minor release is much better” (though no doubt it was worded less baldly than that).

Why do programs have bugs? It may be sheer carelessness (using the wrong variable, or specifying Count < 10 rather than Count <= 10), but some are much more subtle. The worst kind of bug to find is the “combination of circumstances” bug: the program works fine until it's run on the first working day of the month, the first transaction of the run has an error, and the error code is non-numeric. Some of those bugs have been out there for years, and they may show themselves tomorrow — you just don't know. Here's one I know about from my IBM days, because I was sharing an office with the man dealing with it. One customer reported an error and sent us listings to show it — nobody else had hit it. Peter studied these for a long time. He tried hard but couldn't reproduce it on our computers, and I don't know how he found the problem. It turned out that the customer was using non-IBM disk drives — and they were faster than ours! Someone had written code that wrote out the contents of an area of RAM to a file on disk, and a fraction of a second later picked up some value from this area to use elsewhere. He shouldn't have done that, since as soon as the area had been written out it was finished with and was available to be changed by the operating system for some other use.
But with IBM disks the value was still there as expected; using the competitor's disks the write had finished and the memory area had been updated before the program picked it up.
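
The shape of that bug can be shown with a deliberately simplified Python model. Nothing here resembles IBM's actual code: the dictionary `buffer` stands for the area of RAM, the hypothetical `complete_pending_writes` stands for the operating system finishing the write and reclaiming the area, and the `disk_is_fast` flag decides whether that happens before or after the program's next step. The `fixed` variant copies the value before handing the buffer over.

```python
def run_program(disk_is_fast, fixed=False):
    """Toy model: the value the program picks up after asking for its buffer
    to be written to disk. 42 is correct; 0 means it read reused memory."""
    buffer = {"value": 42}        # the area of RAM being written out
    disk_file = []                # records that have reached the disk
    pending_writes = []           # writes issued but not yet finished

    def complete_pending_writes():
        # Once a write finishes, the operating system may reuse the area.
        while pending_writes:
            disk_file.append(pending_writes.pop(0))
            buffer["value"] = 0   # area handed over for some other use

    saved = buffer["value"]               # the fix: copy what you need first
    pending_writes.append(dict(buffer))   # issue the write
    if disk_is_fast:
        complete_pending_writes()         # fast disk: done before the next step
    # The bug: looking in the buffer after it has been handed over.
    value = saved if fixed else buffer["value"]
    complete_pending_writes()             # slow disk: finishes a moment later
    return value


print(run_program(disk_is_fast=False))   # slower disk: the value is still there
print(run_program(disk_is_fast=True))    # faster disk: the area has been reused
```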

Usually finding the bug is the hard part; fixing it is easy. But if your program had been badly designed (and most of them were in those days — not actually designed at all) there was the ever-present danger that fixing a bug somewhere would introduce another problem somewhere else. Structured programming had not yet made any impact; when I first heard the suggestion that you shouldn't use GOTO statements I thought it was a joke!

After the first release of the PL/I Optimising Compiler the group's focus switched from development to maintenance. There's a tendency to dismiss maintenance as a low-level job — a man in overalls with an oily rag going round wiping cog wheels and pistons — but that's totally wrong: maintenance is a much harder job than development. I learnt a lot as a maintenance programmer, though it's not something you'd want to do for too long. IBM had two awards: a “Special Contribution” and an “Outstanding Contribution”. I was assigned a phase of the compiler for which the writer had been given an “Outstanding Contribution” award (which in addition to the kudos was worth several hundred pounds) — and it was terrible code. We had a design point of (I think) 28K, so each phase of the compiler had to fit into that, and when I took it over this phase had 8 bytes spare. I was expected to fix the bugs in it and — even worse — the man who had written it was now my manager. The citation for the award described the module as “sophisticated”, which annoyed me until I looked the word up in a dictionary — remember those?! It had several meanings, none of which was complimentary: they included “not liking simple things” and (my favourite) “a plausible but false argument”. I would study the code for ages, try to write a flowchart or explain things in my own words, and eventually go to him and say “I can't see how this code could ever be executed”. He would stare at it for a long while and then say “Well, it must have been there for historical reasons” — in other words “It did something once, but I can't make head or tail of it now”. He was also good at suggesting a very specific fix for the bug — “Put in this test here and that test there” — but that was just papering over the cracks: the underlying design was still wrong and next month a slightly different program would bypass those tests and show up the same problem, so I would never do it his way.
Eventually I stopped asking him, and we never had a good working relationship! I also knew that to make any headway I had to restructure the code, take out many of the GOTOs and make the whole thing more logical. We weren't working on decks of cards by then; the source code was held in a library on disk. But we couldn't update this directly! We had to produce a file of card images containing commands as well as lines of program, saying things like “Delete lines 1420 to 1490 and replace them by these two lines”. I ended up with a file which said “Delete the entire source and replace it by this new code”, and had to fight strong opposition to this approach. “But we won't be able to measure your productivity”, said management. I've never been convinced that “lines of code added per day” is any measure of productivity. In many cases I was removing chunks of code which did nothing, or combining two very similar chunks of code into one. Is this negative productivity? But I got my own way, and by the end of my time at IBM I had reduced the size by 2K while fixing numerous bugs and also putting in some improvements. Of course I didn't get an award — who would think of giving an award to a maintenance programmer?
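
The card-image update mechanism can be modelled with a short sketch. The command format used here, `(first, last, new_lines)` meaning "delete lines first to last and replace them by these lines", is an assumption for illustration and not the syntax of any real IBM utility; the source library is simply a dictionary from sequence number to line of code.

```python
def apply_update(source, commands):
    """Apply 'delete lines first..last and replace them by these lines' commands.

    source:   dict mapping sequence number -> line of code (left unchanged)
    commands: list of (first, last, new_lines), where new_lines is a list of
              (sequence_number, text) pairs inserted after the deletion
    """
    updated = dict(source)
    for first, last, new_lines in commands:
        for seq in [n for n in updated if first <= n <= last]:
            del updated[seq]
        for seq, text in new_lines:
            updated[seq] = text
    return dict(sorted(updated.items()))   # keep the library in sequence order


library = {1410: "A", 1420: "B", 1430: "C", 1490: "D", 1500: "E"}
# "Delete lines 1420 to 1490 and replace them by these two lines"
print(apply_update(library, [(1420, 1490, [(1420, "X"), (1425, "Y")])]))
# "Delete the entire source and replace it by this new code"
print(apply_update(library, [(min(library), max(library), [(10, "NEW CODE")])]))
```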

The Testing department was separate from the Programming department, and you had to go a long way up the management hierarchy before the two branches met. This was deliberate, so that the Programming Manager couldn't put any pressure on the Testing Manager. They had hundreds of test cases — little programs which were supposed to compile correctly and execute to produce the specified results. We were shown the errors produced by these and of course we worked hard to fix them. But there was also a set of test cases which was kept hidden from us. At first I thought, “That's ridiculous — they've found a bug in the compiler and they won't tell us what it is!” But later I saw the sense in it. What we were doing was tailoring the compiler to the test cases, and the Testing team needed a separate measure of how reliable the compiler was. Each week we had a progress meeting, where Tony Burbridge the manager would show us a graph of test cases passed against time. Early on he said “I think we can see the beginnings of the familiar bell-shaped curve”, and I made myself unpopular by saying “Well, it looks like a straight line to me”. But gradually the curve of normal distribution began to appear and eventually he could announce “We have passed all 900 test cases” (or whatever the number was). Did that mean the compiler was bug-free? Far from it! There were thousands of PL/I programs out there in our customer base, and IBM had to persuade them to switch from the “F” compiler, which came free with the operating system, to the Optimising Compiler which cost $250 a month. We were swamped with APARs — an IBM acronym for “Authorised Program Analysis Report” which cunningly disguises the fact that it's a complaint about a bug.

Languages

In the beginning was machine code. That's the language the machine itself (the Central Processing Unit) understands — a string of on or off elements called bits which you can represent as 1 or 0. On the computers I've used, 8 bits are grouped together into a byte. I could write a 4-byte string as 00110110101001010000001011111110 but I bet if I asked you to write it down you'd make a mistake somewhere! Leaving a gap every 8 bits makes it slightly more memorable: 00110110 10100101 00000010 11111110. In practice programmers write each group of 4 bits as a single hexadecimal digit (0 to 9, then A to F standing for 10 to 15), so those 4 bytes become 36A502FE. Here's a fragment of a machine code program for an IBM 360, written that way: 5860 B052 5B60 B056 4740 B640 5060 B05A. I wouldn't want to write in machine code, though certainly when I bought my Apple II some of the top games programmers did so.
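
The regrouping is easy to check in Python. This sketch uses only the built-in `int(text, 2)` and `format` to show the 32-bit string in groups of 8 bits, each byte as two hexadecimal (base 16) digits, and the whole string as one hexadecimal number, the notation used for the IBM 360 fragment. The function names are made up for the example.

```python
def group_bits(bits, size=8):
    """Split a string of 0s and 1s into groups of `size` bits."""
    return [bits[i:i + size] for i in range(0, len(bits), size)]


def bytes_as_hex(bits):
    """Write each 8-bit group as two hexadecimal digits."""
    return [format(int(group, 2), "02X") for group in group_bits(bits)]


bits = "00110110101001010000001011111110"
print(" ".join(group_bits(bits)))      # the bytes with gaps between them
print(" ".join(bytes_as_hex(bits)))    # the same bytes in hexadecimal
print(format(int(bits, 2), "08X"))     # the whole 4-byte string as one number
```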

The next level up is Assembler, which is much more readable but has a very close relationship to machine code. That same fragment of machine code could have been assembled from this fragment of Assembler:

L R6,INVAL

S R6,OUTVAL

BL ERROR1

ST R6,TOTAL

In case you want to know, this code loads a binary number from field INVAL into Register 6 — a register is a special area where you can do arithmetic and many other things. It then subtracts the number in field OUTVAL from this. If the result is less than zero it goes off to some error code, otherwise it stores the result into the field TOTAL.
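
For the curious, the correspondence between the two fragments can be checked mechanically. Each of these instructions uses the System/360 RX format: one byte of operation code, then a 4-bit register, a 4-bit index register, a 4-bit base register and a 12-bit displacement. The sketch below decodes the hexadecimal; its opcode table covers only the four instructions in this fragment. BL assembles to BC with mask 4, which after a subtraction means "branch if the result was negative". That the displacements X'052', X'056' and X'05A' from base register 11 are where INVAL, OUTVAL and TOTAL live is an inference from the text, not something stated in it.

```python
# Only the four System/360 operation codes that appear in the fragment.
# BL (branch on low) is written in the machine code as BC with mask 4.
RX_OPCODES = {0x58: "L", 0x5B: "S", 0x47: "BC", 0x50: "ST"}


def decode_rx(hex_text):
    """Split one 4-byte RX-format instruction, written in hex, into its fields."""
    word = int(hex_text.replace(" ", ""), 16)
    opcode = word >> 24              # first byte: operation code
    r1 = (word >> 20) & 0xF          # register (for BC, the condition mask)
    x2 = (word >> 16) & 0xF          # index register (0 means none)
    b2 = (word >> 12) & 0xF          # base register
    d2 = word & 0xFFF                # 12-bit displacement from the base
    return RX_OPCODES.get(opcode, f"?{opcode:02X}"), r1, x2, b2, d2


fragment = "5860 B052 5B60 B056 4740 B640 5060 B05A".split()
for i in range(0, len(fragment), 2):
    op, r1, x2, b2, d2 = decode_rx(fragment[i] + " " + fragment[i + 1])
    print(f"{op:<3} {r1},X'{d2:03X}'({x2},{b2})")
```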

Needless to say, I would rather write in Assembler than in machine code, but at every stage of improvement you find programmers who will say, “Oh well, if you do that, anyone can be a programmer”. I feel that way about CMSs (content management systems)!

As computers became more powerful, high-level languages started to appear. In a high-level language the programmer usually has no idea what machine code is being produced, so the resulting program can be very inefficient, but it's much easier to write and it takes far fewer lines of code to achieve the same effect. And again no doubt some people said, “Oh well, if you do that,…”

When I started programming, and for many years later, the two most common languages were COBOL for commercial programming and FORTRAN for scientific programming.

COBOL was designed to be English-like and therefore self-documenting — people now criticise this decision but I can see the logic of it. For instance:

SUBTRACT OUTVAL FROM INVAL

or

SUBTRACT OUTVAL FROM INVAL GIVING TOTAL

whereas a scientist or mathematician would prefer something like

Dec(InVal, OutVal)

or

Total = InVal - OutVal

but it's likely that COBOL programmers were happy with the very restricted way they had to write their programs — it standardised everything and probably made it much easier for one programmer to debug or maintain another programmer's program.

FORTRAN (“Formula Translation”) is a language I know nothing about — read the Wikipedia article to learn more.

Algol and Pascal were created and used by academics, but I don't think they had much impact on the real world.

Much later, BASIC became important because it could be run on home computers with a very small amount of memory. BASIC is an awful language in my opinion, thrown together rather than designed, and originally programs were riddled with GOTO statements specifying a line number rather than a label, so when you were trying to debug a program you had to be aware that every line was a potential branch-in point. People forget that it stands for “Beginners' All-purpose Symbolic Instruction Code” — it was aimed at beginners, not experienced programmers writing complex programs. But BASIC was where Bill Gates and Microsoft got their start, IBM installed Microsoft's version of BASIC on the IBM PC, and later Microsoft produced Visual BASIC to run on Windows. It's still an awful language, but at least it doesn't have line numbers any more!
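
One way to cut down the "every line is a potential branch-in point" problem is to list the lines that something actually jumps to. Here is a rough Python sketch, assuming a simple dialect where branches are written GOTO n, GOSUB n, THEN n or ON k GOTO n, n, n. The function name and sample listing are made up, and real dialects have more forms (GO TO with a space, for instance, or a GOTO inside a quoted string) that this ignores.

```python
import re

# GOTO, GOSUB or THEN followed by one line number or a comma-separated list of them.
BRANCH = re.compile(r"\b(?:GOTO|GOSUB|THEN)\s+(\d+(?:\s*,\s*\d+)*)")


def branch_targets(program):
    """Return the set of line numbers that some statement can jump to."""
    targets = set()
    for match in BRANCH.finditer(program):
        for number in match.group(1).split(","):
            targets.add(int(number))
    return targets


listing = """10 INPUT N
15 ON K GOTO 10, 30, 50
20 IF N < 0 THEN 60
30 PRINT N
40 GOSUB 100
50 GOTO 10
60 END
100 PRINT "DONE": RETURN"""
print(sorted(branch_targets(listing)))
```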

Then came C and C++ and a myriad of other languages, some of them general purpose, some written for a specific task. When I was lecturing I would say that I'd been born at exactly the right time: ten years earlier there would have been very few jobs for programmers, and by the time I retired computers would be programming themselves — you'd talk to the computer, tell it what you wanted to do, it would ask questions to clear up any ambiguities, and then create the program. How wrong I was! These days programming languages are much larger and far more complicated than in the early days. PL/I was criticised because there was too much in it — the language was far too large. And I knew the entire language — I was a world expert in PL/I. Now I look at C# running under .Net with its vast number of libraries of functions and I'm overwhelmed: there's no chance that I could ever master it.

I predict that in the future, after I'm dead, people will look back on this as the dark ages of computing. They'll be horrified at all the complexity of the bad old days!

Websites

Clarkson Research paid a lot of money to a company to produce a website for them, but Martin Stopford wasn't happy with the company or the site and wanted to take it off their hands as soon as it had been tested and accepted. He got me to learn ASP (Active Server Pages, now known as Classic ASP), which was Microsoft's technology for running scripts on the server. He wanted me to take over the website, and his exact words were “I can almost promise you a lot of work”. I got virtually nothing. His department members wanted to do it themselves, and thought it was far too important a job to be subcontracted to an outsider, so he was outvoted.

If you're not knowledgeable about websites, let me explain a few things. Many websites just contain static text: every time someone clicks on your page they see the same thing unless you have manually updated it. More complicated websites are dynamic: the user inputs some information and the results of a calculation or a database search are displayed. For instance, if you use a search engine you get back a page of results, and that's a dynamic web page; it has been built up on the server and then sent down to your browser.

For static websites I use HTML (Hypertext Markup Language, the standard language of websites since the web started) and CSS (Cascading Style Sheets, so that you can change the style of all your web pages in one place rather than having to search through all the pages for purple titles because you've decided you prefer blue ones). I also use JavaScript, which is a sort of half-way house: it's a programming language, but it runs in the browser on your machine rather than on the server, so it's often much quicker.

For instance, a script running in your browser can report how wide your browser window is without asking the server anything.

For dynamic web sites I need programs to run on the server, so in addition to all this I use Classic ASP or its successor ASP.Net (coding in C#) and for database work I use Microsoft Access or SQL Server, though I'm happy to use other databases. I now also have a working knowledge of PHP and the MySQL database, and I've even dabbled in LibreOffice, a free open-source alternative to Microsoft Office which has its own (very non-standard) database system.

These days a high proportion of websites use a Content Management System (CMS) such as WordPress, Drupal or Joomla! (the exclamation mark is part of the name, not my emphasis). These allow non-programmers to create and update websites without any knowledge of HTML, JavaScript, etc., and I have no interest in them: I'm a programmer and I like to get my hands dirty. As a result my websites tend to run much faster, and their pages are much smaller than most. According to a survey in 2017 the average page size (including HTML, CSS, JavaScript, images and videos) was 3MB. That's three million bytes. I've removed CMSs from several sites, and they all run much faster now. I suppose I feel that working with a CMS is like calling a dance with recorded music. Some callers feel much safer and happier with recorded music because they know exactly what it will do every time, and they don't need to know how to deal with bands, but give me live music any day!

Maybe you would call WebEdit a CMS, but it's rather different. I hold the source of almost all the pages of my website in seven RTF files: one for the original part like this, one for Technique, one for Instructions and so on. This means I can edit this page with real bold and italic text, and I can use various dot commands (going back to the Borland Turbo Editor Toolbox, which took the idea from WordStar) to do all sorts of clever things. For instance, a .T2 command generates a horizontal line followed by an <H2> heading, and a .BB command at the start of the page section generates the button-block with links to all these sections, plus a hover box for headings which are too wide for the button (which doesn't happen on this page, but see my Modern Western Square Dance page).

When I started testing websites on smartphones in 2020 I realised that without a Home key the user has to do a lot of scrolling to get back to the top of a long page, so I changed the .T2 code in WebEdit to follow the heading with an up-arrow which the user could click to get back to the top. All I then had to do was regenerate the pages, and every heading produced by a .T2 command had the arrow, except the first one on each page, which I assume is near the top and therefore doesn't need one.

I use a .TA marker to generate an HTML table from lines just containing fields separated by | signs, which is a lot quicker and easier than writing all the HTML myself. WebEdit also tells me if I have non-matching brackets or quotes in a paragraph, converts "quotes" to “smart quotes” and has many other useful features.
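WebEdit's own source isn't shown here, so what follows is only a minimal Python sketch of how three of the features just described might work: expanding a .T2 heading (skipping the back-to-top arrow on the first one), turning .TA lines of |-separated fields into an HTML table, and flagging a paragraph whose brackets or straight quotes don't match. The function names, the `#top` anchor and the exact HTML produced are assumptions for illustration, not WebEdit's real output.

```python
import html

# Hypothetical sketch: function names and HTML output are illustrative,
# not WebEdit's actual code.

def expand_t2(title: str, is_first: bool) -> str:
    """Expand a .T2 command: horizontal rule, <h2> heading, then a back-to-top
    arrow, except for the first .T2 on the page (assumed to be near the top)."""
    parts = ["<hr>", f"<h2>{html.escape(title)}</h2>"]
    if not is_first:
        # '#top' is an assumed anchor placed at the top of the page.
        parts.append('<a href="#top">&uarr;</a>')
    return "\n".join(parts)


def expand_ta(lines: list[str]) -> str:
    """Expand a .TA block: each line of |-separated fields becomes one table row."""
    rows = []
    for line in lines:
        cells = "".join(f"<td>{html.escape(field.strip())}</td>"
                        for field in line.split("|"))
        rows.append(f"<tr>{cells}</tr>")
    return "<table>\n" + "\n".join(rows) + "\n</table>"


# Maps each closing bracket to the opening bracket it must match.
CLOSERS = {")": "(", "]": "[", "}": "{"}

def brackets_match(paragraph: str) -> bool:
    """Return True if (), [] and {} nest correctly and straight double quotes pair up."""
    stack = []
    for ch in paragraph:
        if ch in "([{":
            stack.append(ch)
        elif ch in CLOSERS:
            # A closer must match the most recent unclosed opener.
            if not stack or stack.pop() != CLOSERS[ch]:
                return False
    return not stack and paragraph.count('"') % 2 == 0
```

Because the page HTML is generated from expansions like these, changing one function and regenerating every page is enough to change every heading on the site, which is exactly what happened with the up-arrows.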

The other big difference is that most CMSs run on the server, and the webmaster may have no idea how to upload web pages: every time the user clicks on a link the page is generated on the fly. I don't need that on my site, though I do use that approach on barndances.org.uk, which picks up the selected dance from a database table. My pages are almost all static, so why go through the overhead of generating them afresh every time somebody wants to look at them? It also means I've been able to update this page over the course of a few weeks, view it in WebEdit as it will appear to the user, make corrections and improvements, and give it to Renata to comment on, without anyone else being able to see the work in progress.
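The difference between a page generated on the fly and a static page can be sketched in a few lines of Python. Here the standard-library `sqlite3` module stands in for the PHP and MySQL that barndances.org.uk actually uses, and the table layout (`dances` with `id`, `title` and `instructions` columns) and the function name are hypothetical. The point is that this query and HTML-building work is repeated on every request, whereas a static page is simply a file that is sent as it is.

```python
import html
import sqlite3

# Hypothetical schema: the real barndances.org.uk uses PHP and MySQL, not SQLite.

def render_dance_page(conn: sqlite3.Connection, dance_id: int) -> str:
    """Build the HTML for one dance on the fly, as a dynamic site does on every request."""
    row = conn.execute(
        "SELECT title, instructions FROM dances WHERE id = ?", (dance_id,)
    ).fetchone()
    if row is None:
        return "<h1>Dance not found</h1>"
    title, instructions = row
    # Escape database text so stray < or & characters can't break the page.
    return (f"<h1>{html.escape(title)}</h1>\n"
            f"<p>{html.escape(instructions)}</p>")


if __name__ == "__main__":
    # Made-up sample data, for demonstration only.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE dances (id INTEGER PRIMARY KEY, title TEXT, instructions TEXT)")
    conn.execute("INSERT INTO dances VALUES (1, 'Sample Dance', 'Made-up instructions')")
    print(render_dance_page(conn, 1))
```

Using a `?` placeholder rather than pasting the id into the SQL string keeps user input from being interpreted as SQL.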

Originally this page was a CV to encourage people to employ me as a programmer, but I've given up on that (though I'm still available, don't get me wrong, just Contact me). However, I'd still like to tell you about some of the websites I've written (or rewritten). There were several others which no longer exist — such is the nature of websites.

- colinhume.com

Well of course I wrote my own site! It's mainly static, but I have a Feedback button at the foot of most pages to call a simple ASP script (about 250 lines of code) which updates and displays entries in an Access database and also sends off an email to me. An ASP script on my Home page provides a search facility which lists the pages containing the exact phrase the user entered followed by pages containing one or more of the words. Another ASP script provides a Search facility in my Instructions section so that you can put in a word or a few letters and it will find all the dance titles containing this string. There's some ASP.Net code so that when you click the “Music” button against one of the Dances in the Instructions section it pulls out the ABC code from a text file, modifies this somewhat, then runs programs to generate the musical notation in Portable Document Format (PDF) for the user to print out and in MIDI and MP3 format for the user to listen to. And if you click the “Print” button it pulls out the instructions for just that dance and prints them.

- ???

I'm afraid I can't even remember the name of this one, so I can't show you an old version on archive.org! I was contacted by Charles Swabey, a retired barrister with a great interest in astrology. He'd used a strange program which let non-programmers create a flowchart and then run it. But he didn't know how to run this program from a server. In fact in those days you weren't allowed to run your own programs on shared hosting in case they corrupted other sites on the hosting space. He wanted to buy a server for me to maintain, but my contact in Clarkson Research said that was an enormous job, so instead I rewrote the program in ASP. It involved a lot of complicated calculations, to score the quality of any personal relationship by comparing their Sun signs, Moon signs, Chinese Year Animals, Chinese Astrology Lunar month animals, Numerology Life Path numbers, birth orders and Yin/Yang balance. It had two comprehensive compatibility tests — one using Western Astrology, and the other using only Chinese Astrology for Element Charts. It would predict the sex of any children from the union. It would also tell you how well you would do in various professions.

Charles had no idea how to market this. He had big ideas about running speed-dating meetings based on the program, and he was thinking of getting Susan Hampshire to do some adverts, but nothing happened. Originally it was free, and thousands of people were visiting the site. I thought “If only 1% of them pay up he'll be a rich man”. But they didn't: as soon as he started charging a very small amount the flow of visitors dried up. Eventually he suggested he would stop paying me and instead give me a quarter of the income, but as Renata pointed out, a quarter of nothing is nothing! The other problem was that he was constantly tampering with the site: I would go into it and find that the layout was all wrong because he'd been attacking it with Dreamweaver, which didn't have the built-in HTML validation that WebEdit has. Later he got some other programmers to build a smartphone app called AstroPal, but I see that's also been withdrawn.

- availableplumbers.co.uk

A very straightforward static site, including a photo gallery. It's currently not available: my client has renewed the domain for 10 years but never gave me the information necessary for me to link the domain name to the hosting space.

- web.archive.org/web/20180901233826/http://manorparkkitchens.co.uk

Another similar one. This is an archived version because the owner hasn't paid me to renew the domain name.

- Rhythms of the World (archived version)

This is the version of the site that I worked on. At that time ROTW was the biggest free music festival in the UK. (The current version uses a CMS and is rubbish!) I didn't design the site, but I did a lot of work changing and improving it. I set up the Performers | Archive section using ASP and a database, but now that I'm no longer involved with the site it's been changed to static text, which is a lot more work for the people who have to update it.

- Kafoozalum (archived version)

I didn't write the site originally, but I did a lot of tidying up and converted it to use CSS.

- cyclamengardens.com

My wife Renata's site. It holds photos of her garden and some events held there over the years.

- mlecs.com

The most complex site I've yet written: currently 7,477 lines of HTML, 20,106 lines of ASP, 1,684 lines of CSS and 518 lines in JavaScript files, making a total of 29,785 lines of code on the server, plus a database containing 27 tables. This belongs to Martin Stopford, whom I've mentioned several times. It offers a complete course of Maritime Lectures, a comprehensive review of institutions which teach maritime courses, and quite a bit more.

Martin is a charismatic speaker and a world authority on Maritime Economics: his book Maritime Economics is a best-seller, now in its 3rd edition, and he's currently (2020) working on the 4th edition. He travels all over the world giving seminars and lectures. But his management style is to bully his subordinates into submission. I wrote to him over 20 years ago telling him that this might work on his permanent employees but it didn't work on me. So we have a stormy relationship. He's forever changing his mind; for instance, there are three different ways to upload an image to the server because at different times he was adamant that this was the way he wanted to do it. He praised my login screen repeatedly, and then suddenly told me it was amateurish and looked as if it had been thrown together in half an hour. And so on. Somehow we still manage to work together, but I don't know whether he's ever going to bring himself to advertise the site widely and get some users!

- catz.co.uk

Martin introduced me to John Doviak, who was in charge of the Cambridge Academy of Transport, which runs courses on maritime topics. John wasn't happy with the CMS for his website or the people who had built it. I rewrote the site using static HTML pages, plus code in WebEdit which allows John to update the list of courses on the Home page automatically by scanning the course pages.

- mayheydays.org.uk

The original site was for Eastbourne Folk Festival and used a CMS. I converted it to straightforward HTML, and it now loads a lot quicker.

- barndances.org.uk

Again the original site used a CMS. I rewrote it using PHP and a MySQL database, and it's now very easy for an administrator to edit and add dances.

- folksales.com

A static site, but I had to do a lot of work to enable the owners to update the Stock page from their LibreOffice database.

- barndances.org.uk/Antony

Antony Heywood's Database of well over 20,000 dances. Antony was looking for someone to take it over. Instead I changed some of his PHP and wrote a lot more of my own, with the result that he was happy to carry on updating it for several years, though he's now handed it over to Dilip Sequeira. I also moved the site to hosting space paid for by CDSS, so the database should be around for a long time to come.

- greenerywest.net

Michael Siemon created the site using a CMS no longer supported by Apple, and when he died Sharon Green had no way of adding her new dances to the site. I was horrified when I discovered this and offered to help. I wasn't able to get rid of the CMS for most of the site, but I got rid of it for the bits I needed to change and then wrote some front-end pages in PHP so that Sharon can add new dances and update her Home page and her Upcoming and Recent Gigs page without knowing anything about HTML.
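The two searches on colinhume.com mentioned at the top of this list (pages containing the exact phrase first, then pages containing any of the words; and the Instructions search for dance titles containing a few typed letters) can be sketched in Python. The real versions are ASP scripts; this sketch assumes the pages have already been loaded into a dictionary mapping page name to plain text, matches case-insensitively, and uses hypothetical function names.

```python
import re

# Hypothetical sketch of the two searches; the real site uses ASP scripts.

def site_search(pages: dict[str, str], query: str) -> list[str]:
    """Pages containing the exact phrase come first, then pages that contain
    at least one of the query's words (whole-word, case-insensitive)."""
    phrase = " ".join(query.lower().split())  # normalise internal spacing
    words = set(re.findall(r"\w+", phrase))
    exact, partial = [], []
    for name, text in pages.items():
        lowered = text.lower()
        if phrase and phrase in lowered:
            exact.append(name)
        elif words & set(re.findall(r"\w+", lowered)):
            partial.append(name)
    return exact + partial


def title_search(titles: list[str], fragment: str) -> list[str]:
    """Instructions search: every dance title containing the typed letters anywhere."""
    needle = fragment.lower()
    return [t for t in titles if needle in t.lower()]
```

Keeping the exact-phrase matches and the any-word matches in separate lists is what lets the best matches appear at the top without any scoring scheme.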

Conclusion